On the AEM forum there’s an interesting question:

Does this mean that all requests to the page node are internally redirected to _jcr_content for HTML and XML calls but not for other extensions ?

Without answering it right here, this raises another question: how is a cq:Page node actually resolved? Or, put differently, how does Sling know that it should resolve a call to a cq:Page node to the resource type specified in its jcr:content node, especially since the “cq:Page” node does not have a “sling:resourceType” property at all?

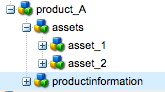

A minimal page can look like this (JSON dump):

    {
      "jcr:primaryType": "cq:Page",
      "jcr:createdBy": "admin",
      "jcr:created": "Mon Dec 03 2018 19:09:44 GMT+0100",
      "jcr:content": {
        "jcr:primaryType": "cq:PageContent",
        "jcr:createdBy": "admin",
        "cq:redirectTarget": "/content/we-retail/us/en",
        "jcr:created": "Mon Dec 03 2018 19:09:44 GMT+0100",
        "cq:lastModified": "Tue Feb 09 2016 00:05:48 GMT+0100",
        "sling:resourceType": "weretail/components/structure/page",
        "cq:allowedTemplates": ["/conf/we-retail/settings/wcm/templates/.*"]
      }
    }

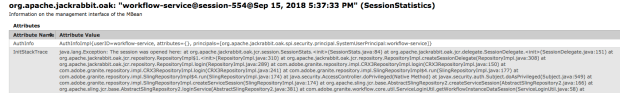

A good tool for understanding how a page is rendered is the “Recent Requests” tool available in the OSGi web console (/system/console/requests).

When I request /content/we-retail.html and check this request in the recent requests tool, I get this result (reduced to the relevant lines):

1967 TIMER_END{65,ResourceResolution} URI=/content/we-retail.html resolves to Resource=JcrNodeResource, type=cq:Page, superType=null, path=/content/we-retail

1974 LOG Resource Path Info: SlingRequestPathInfo: path='/content/we-retail', selectorString='null', extension='html', suffix='null'

1974 TIMER_START{ServletResolution}

1976 TIMER_START{resolveServlet(/content/we-retail)}

1990 TIMER_END{13,resolveServlet(/content/we-retail)} Using servlet /libs/cq/Page/Page.jsp

1991 TIMER_END{17,ServletResolution} URI=/content/we-retail.html handled by Servlet=/libs/cq/Page/Page.jsp
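The “Resource Path Info” line in the log above shows the request split into path, selectors, extension and suffix. As a rough illustration of that split, here is a minimal sketch in plain Java. It is not Sling's implementation: Sling first resolves the longest matching resource path in the repository, whereas this sketch simply assumes that the resource path contains no dots and that there is no suffix. The class and method names (`RequestPathSketch`, `decompose`) are made up for this example.

```java
public class RequestPathSketch {

    // Simple holder for the parts of a request path (hypothetical, for illustration only).
    static final class Parts {
        final String path;
        final String selectorString;
        final String extension;

        Parts(String path, String selectorString, String extension) {
            this.path = path;
            this.selectorString = selectorString;
            this.extension = extension;
        }
    }

    // Splits a URL path at the first dot of its last segment.
    // Assumption: the resource path itself contains no dots and no suffix follows.
    static Parts decompose(String urlPath) {
        int lastSlash = urlPath.lastIndexOf('/');
        int firstDot = urlPath.indexOf('.', lastSlash + 1);
        if (firstDot < 0) {
            // No dot at all: neither selectors nor extension.
            return new Parts(urlPath, null, null);
        }
        String path = urlPath.substring(0, firstDot);
        String rest = urlPath.substring(firstDot + 1);
        int lastDot = rest.lastIndexOf('.');
        if (lastDot < 0) {
            // Only one dot-separated token: that is the extension.
            return new Parts(path, null, rest);
        }
        // Everything before the last dot is the selector string, the remainder the extension.
        return new Parts(path, rest.substring(0, lastDot), rest.substring(lastDot + 1));
    }

    public static void main(String[] args) {
        Parts p = decompose("/content/we-retail.html");
        System.out.println("path=" + p.path
                + ", selectorString=" + p.selectorString
                + ", extension=" + p.extension);
    }
}
```

For /content/we-retail.html this yields the same values as the log line: the path /content/we-retail, no selectors, and the extension html.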

The interesting part is that the /content/we-retail resource has a type (= resource type) of “cq:Page”, and further down in the snippet we see that it is resolved to /libs/cq/Page/Page.jsp; that means the resource type is effectively treated as “cq/Page”.

And yes, if no resource type property is available, Sling’s first fallback strategy is to use the JCR node type as the resource type; for the script lookup, the namespace colon is turned into a slash, so “cq:Page” becomes “cq/Page”. And if this fails as well, it falls back to the default servlets.
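To make the fallback concrete, here is a minimal sketch in plain Java. It only models the rule described above with a map of node properties; it does not use the Sling API, and the class and method names (`ResourceTypeFallback`, `resourceType`, `toScriptFolder`) are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

public class ResourceTypeFallback {

    // Returns the explicit sling:resourceType if present,
    // otherwise falls back to the JCR primary node type.
    static String resourceType(Map<String, String> nodeProperties) {
        String explicit = nodeProperties.get("sling:resourceType");
        return explicit != null ? explicit : nodeProperties.get("jcr:primaryType");
    }

    // Converts a resource type into a relative script folder:
    // the namespace colon becomes a slash ("cq:Page" -> "cq/Page").
    static String toScriptFolder(String resourceType) {
        return resourceType.replace(':', '/');
    }

    public static void main(String[] args) {
        // The page node from the JSON dump: no sling:resourceType property.
        Map<String, String> page = new HashMap<>();
        page.put("jcr:primaryType", "cq:Page");

        // Its jcr:content child: an explicit sling:resourceType is set.
        Map<String, String> content = new HashMap<>();
        content.put("jcr:primaryType", "cq:PageContent");
        content.put("sling:resourceType", "weretail/components/structure/page");

        System.out.println(toScriptFolder(resourceType(page)));    // cq/Page
        System.out.println(toScriptFolder(resourceType(content))); // weretail/components/structure/page
    }
}
```

The first result is the folder that, under the search path /libs, leads to /libs/cq/Page/Page.jsp from the log; the second is the resource type that takes over once jcr:content is included.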

OK, now we have a point to investigate. The /libs/cq/Page/Page.jsp is very simple:

<%@include file="proxy.jsp" %>

And in proxy.jsp there’s a call to the RequestDispatcher to include the jcr:content resource; from there on, the standard resolution that we are all used to starts.

And to get back to the forum question: if you look around in /libs/cq/Page, you can find more scripts that deal with other extensions and also some selectors. All of them contain nothing but the include of proxy.jsp. If your selector and extension combination does not work as expected, you might want to add an overlay there.
