This is part 5 and the final post of the blog post series about performance test modelling; see part 1 for an overview and the links to all articles of this series.

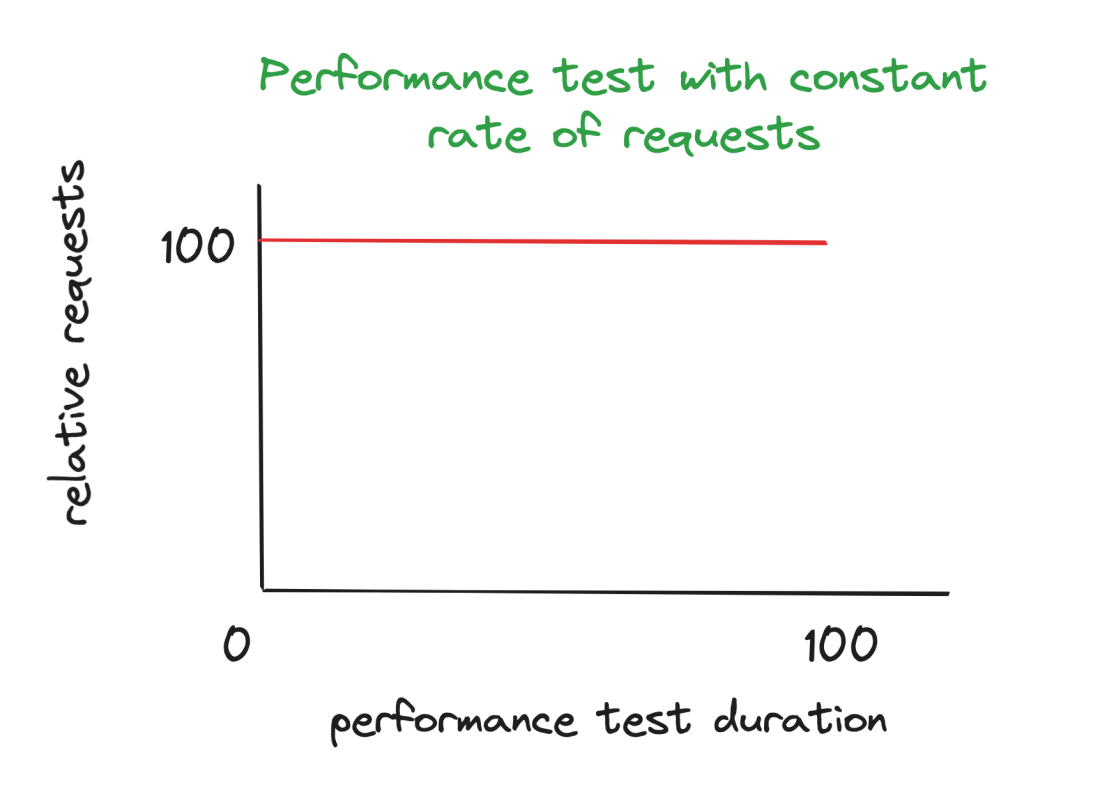

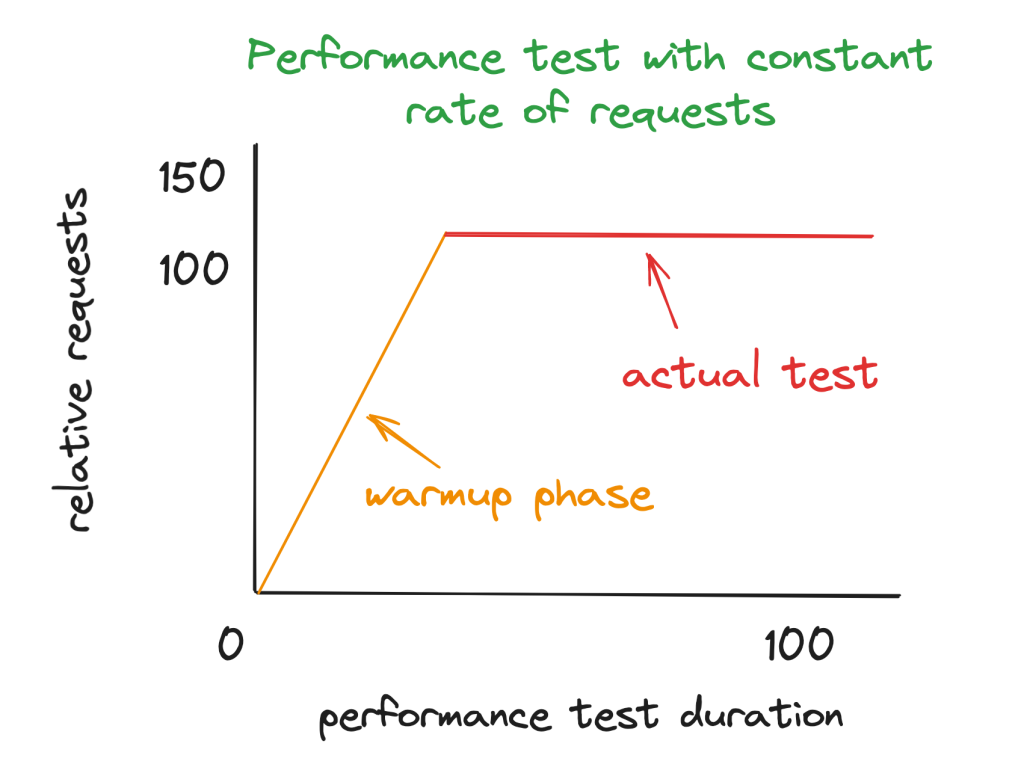

In the previous post I discussed the impact of the system which we test, how the modelling of the test and the test content will influence the result of the performance test, and how you implement the most basic scenario of the performance tests.

In this blog post I want to discuss the predicted result of a performance test and its actual outcome, and what you can do when these do not match (actually they rarely do on the first execution). I also want to discuss the situation where, after go-live, you find that a performance test delivered the expected results but did not match the behavior observed in production.

Scenario 1: The performance test does not match the expected results

In my experience every performance test, no matter how good or bad the basic definition is, contains at least 2 relevant data points:

- the number of concurrent users (we discussed that already in part 1)

- and an expected result, for example that the transaction must be completed within N seconds.

What if you don’t meet the performance criteria in point 2? This is typically the time when customers of AEM as a Cloud Service start to raise questions to Adobe about the number of pods, hardware details etc., as if the problem could only be the hardware sizing on the backend. If you don’t have a clear understanding of all the implications and details of your performance tests, this often seems to be the most natural thing to ask.

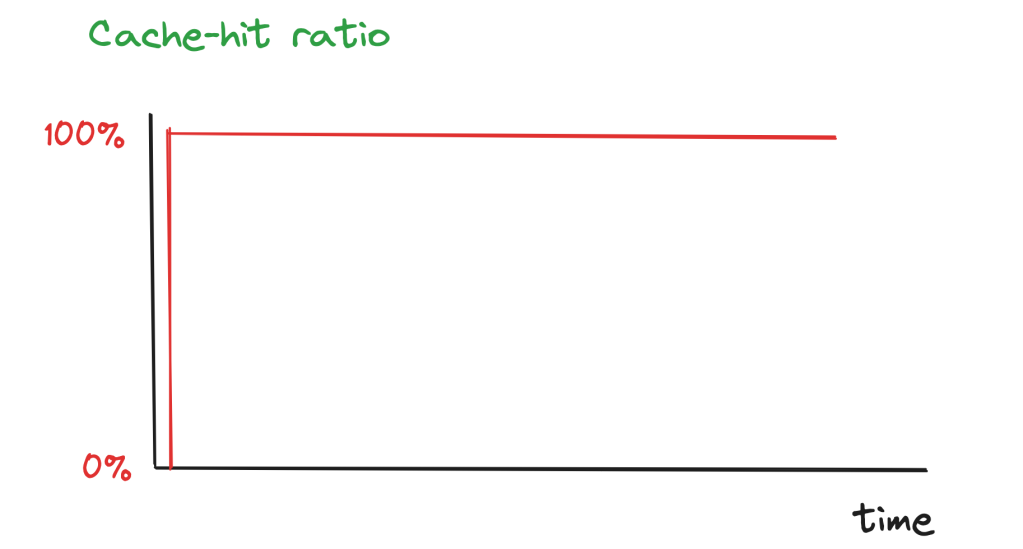

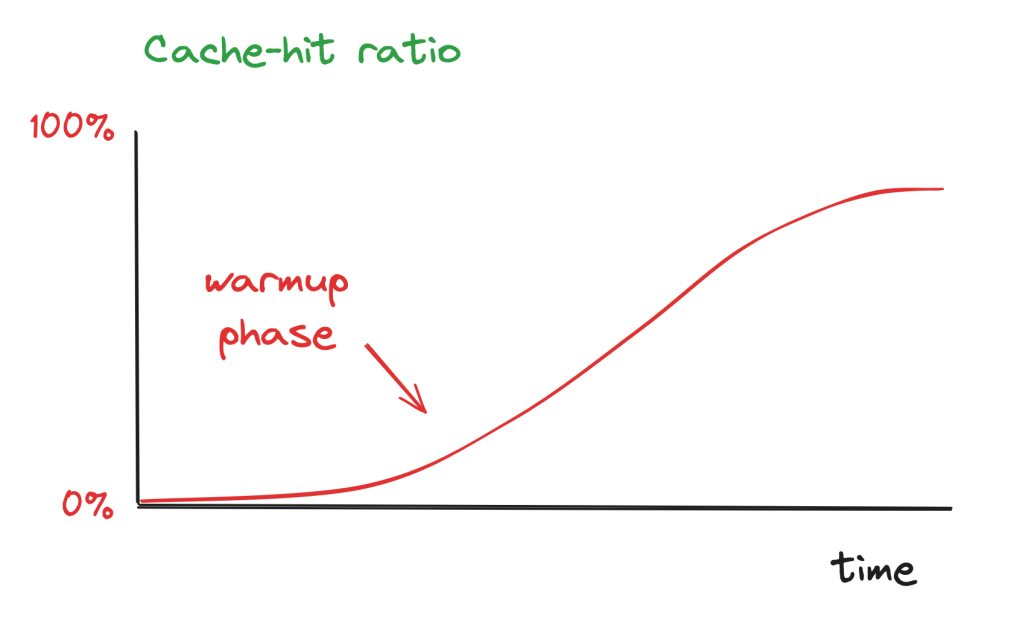

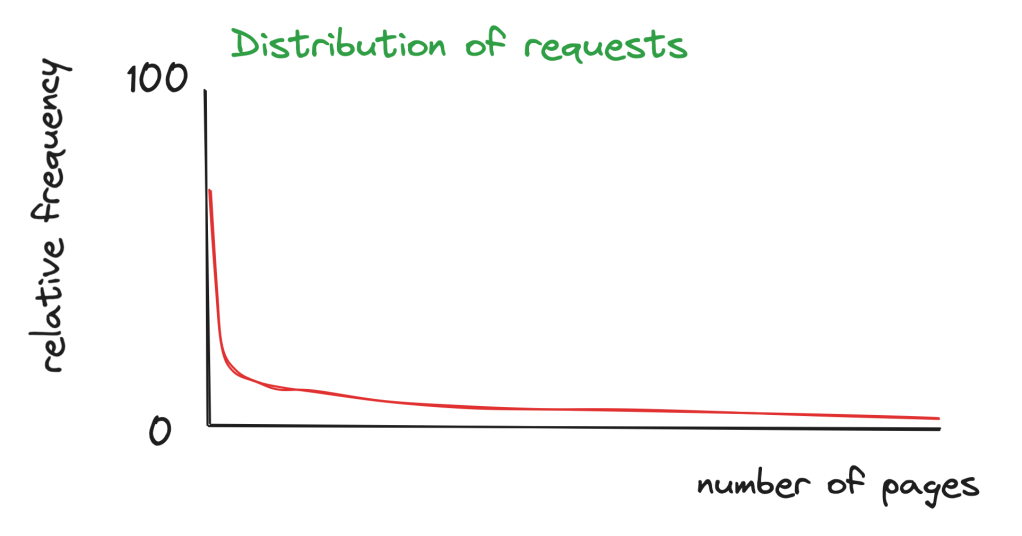

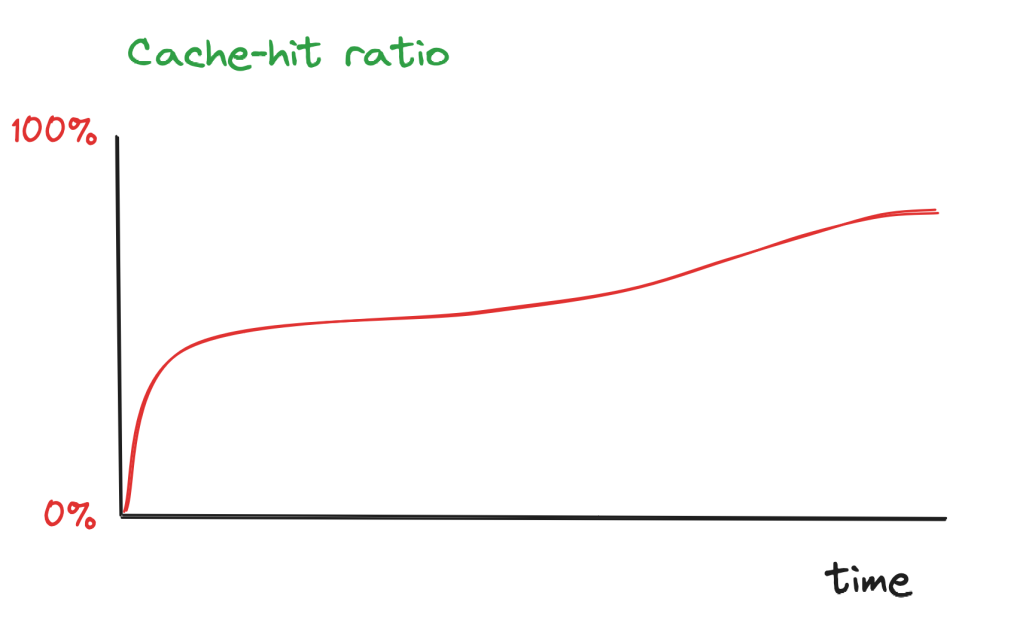

But if you have built a good model for your performance test, your first task should be to compare the assumptions with the results. Do you have your expected cache-hit ratio on the CDN? Were some assumptions in the model overly optimistic or pessimistic? As you have actual data to validate your assumptions you should do exactly that: go through your list of assumptions and check each one of them. Refine them. And when you have done that, modify the test and start another execution.
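One way to make this review systematic is to write the assumptions of the model down as numbers and compare them with what the test run actually measured. The following is only a minimal sketch of that idea, not a tool provided by Adobe; the assumption names, all values and the 10% tolerance are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    name: str
    expected: float          # value assumed in the test model (must not be 0)
    measured: float          # value observed in the test run
    tolerance: float = 0.10  # allowed relative deviation (hypothetical default)

    def deviation(self) -> float:
        """Relative deviation of the measured value from the expected one."""
        return abs(self.measured - self.expected) / self.expected

    def holds(self) -> bool:
        return self.deviation() <= self.tolerance


def review(assumptions: list[Assumption]) -> list[Assumption]:
    """Return the assumptions that need to be refined before the next run."""
    return [a for a in assumptions if not a.holds()]


# Hypothetical numbers, for illustration only.
model = [
    Assumption("CDN cache-hit ratio", expected=0.90, measured=0.72),
    Assumption("requests per page view", expected=40, measured=42),
    Assumption("share of logged-in users", expected=0.05, measured=0.05),
]

for a in review(model):
    print(f"refine: {a.name} (expected {a.expected}, measured {a.measured})")
```

With these made-up numbers only the cache-hit ratio is flagged, so that is the assumption to refine before the next execution.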

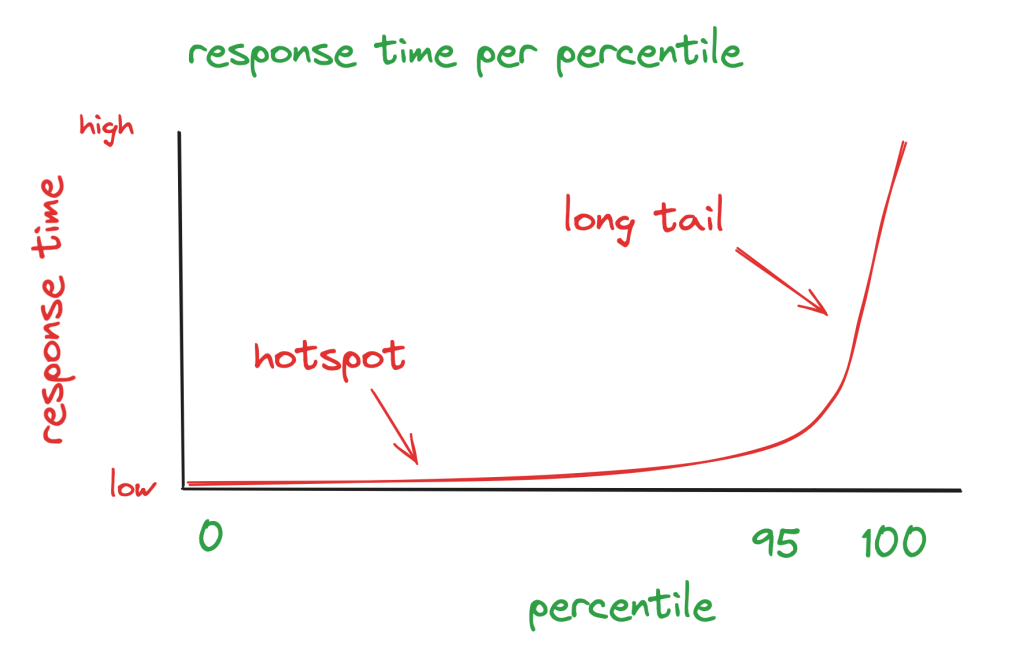

And at some point you might come to the conclusion that all assumptions are correct and you have the expected cache-hit ratio, but the latency of the cache misses is too high (in which case the required action is performance tuning of individual requests). Or that you have already reduced the cache MISSES (and cache PASSES) to the minimum possible and the backend is still not able to handle the load (in which case the expected outcome should be an upscale). It can also be both.

That’s fine, and then it’s perfect to talk to Adobe, and share your test model, execution plan and the results. I wrote in part 1:

> As you can imagine, if I am given just a few diagrams with test results and test statistics as preparation for this call with the customer … this is not enough, and very often more documentation about the test is not available. Which often leads to a lot of discussions about some very basic things and that adds even more delay to an already late project and/or bad customer experience.

But in this situation, when you have a good test model and have done your homework already, it’s possible to directly have a meaningful discussion without the need to uncover all the hidden assumptions. Also, if you have that model at hand, I assume that performance tests are not an afterthought, and that there are still reasonable options to make some changes, which will either completely fix the situation or at least remediate the worst symptoms, without impacting the go-live and the go-live date too much.

So while this is definitely not the outcome we all work, design, build and ultimately hope for, it’s still much better than the second scenario below.

I hope that I don’t need to talk about unrealistic expectations in your performance tests, for example delivering a p99.9 latency of 200 ms while at the same time requiring that a good number of requests always be handled by the AEM backend. You should have detected these unrealistic assumptions much earlier, mostly during design and then in the first runs during the evolution phase of your test.
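A quick back-of-the-envelope check shows why such a combination cannot work. The sketch below assumes a deliberately simplified model in which every request is either a CDN hit that always meets the target or a request handled by the backend with a fixed latency; the function name, the 5% backend share and the 800 ms backend latency are invented for illustration.

```python
def percentile_target_feasible(backend_share: float,
                               backend_latency_ms: float,
                               target_ms: float,
                               percentile: float) -> bool:
    """Simplified check: CDN hits are assumed to always meet the target."""
    if backend_latency_ms <= target_ms:
        return True
    # Backend requests miss the target, so all of them must fit into the
    # share of requests which the percentile allows to be slower.
    allowed_slow_share = 1.0 - percentile / 100.0
    return backend_share <= allowed_slow_share


# Hypothetical: 5% of all requests reach the backend and take 800 ms there.
print(percentile_target_feasible(0.05, 800, target_ms=200, percentile=99.9))
```

A p99.9 target leaves room for only 0.1% of slow requests, so in this model the target is out of reach as soon as more than one request in a thousand has to be served by a backend that is slower than 200 ms.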

Scenario 2: After go-live the performance is not what it’s supposed to be

In this scenario a performance test was either not done at all (don’t blame me for it!) or the test passed, but the results of the performance tests did not match the observed reality. This often shows up as outages in production or unbearable performance for users. This is the worst case scenario, because everyone assumed the contrary as the performance test results were green. Neither the business nor the developer team are prepared for it, and there is no time for any mitigation. This normally leads to an escalated situation with conference calls, involvement from Adobe, and in general a lot of stress for all parties.

The entire focus is on mitigation, and we (I am speaking now as a member of the Adobe team, who is often involved in such situations) will try to do everything to mitigate that situation by implementing workarounds. As in many cases the most visible bottleneck is on the backend side, upscaling the backend is indeed the first task. And often this helps to buy you some time to perform other changes. But there are even cases where an upscale of 1000% would be required to somehow mitigate the situation (which is possible, but also very short-lived, as every traffic spike on top will require an additional 500% …). Also, it’s impossible to reduce the latency of a single-threaded request that takes 20 seconds by adding more CPU. These cases are not easy to solve; the workaround often takes quite some time and is often very tailored, and there are cases where a workaround is not even possible. In any case, it’s normally not a nice experience for any of the involved parties.
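The difference between capacity and latency can be illustrated with some simple arithmetic. This is a rough sketch under idealized assumptions (no queueing, every thread fully usable); the number of threads per instance and the instance counts are hypothetical and do not describe an actual AEM configuration.

```python
def max_throughput_rps(instances: int, threads_per_instance: int,
                       service_time_s: float) -> float:
    """Upper bound on requests per second when all threads are busy."""
    return instances * threads_per_instance / service_time_s


def best_case_latency_s(service_time_s: float, instances: int) -> float:
    """Best-case latency of one single-threaded request.

    The request runs on exactly one thread, so additional instances
    (or CPU cores) do not shorten it; `instances` is deliberately unused.
    """
    return service_time_s


SERVICE_TIME_S = 20.0       # the 20-second request mentioned above
THREADS_PER_INSTANCE = 10   # hypothetical value

for instances in (2, 20):   # hypothetical sizes before and after an upscale
    rps = max_throughput_rps(instances, THREADS_PER_INSTANCE, SERVICE_TIME_S)
    latency = best_case_latency_s(SERVICE_TIME_S, instances)
    print(f"{instances:>2} instances: up to {rps:.1f} req/s, "
          f"best-case latency {latency:.0f} s")
```

In this model ten times the instances allow ten times the throughput, but each individual request still takes 20 seconds. That part can only be fixed by tuning the request itself.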

I refer to all of these actions as **workarounds**. In bold. Because they are not the solution to the challenge of performance problems. They cannot be a solution because this situation proves that the performance test was testing some scenarios, but not the scenario which shows up in the production environment. It also raises valid concerns about the reliability of other aspects of the performance tests, and especially about the underlying assumptions. Anyway, we are all trying to do our best to get the system back on track.

As soon as the workarounds are in place and the situation is somehow mitigated, 2 types of questions will come up:

- What does a long-term solution look like?

- Why did that happen? What was wrong with the performance test and the test results?

While the response to (1) is very specific (and definitely out of scope of this blog post), the response to (2) is interesting. If you have a well-documented performance test model, you can compare its assumptions with the situation in which the production performance problem happened. You have the chance to spot the incorrect or missing assumption, adjust your model and then the performance test itself. And with that you should be able to reproduce your production issue in a performance test!

And if you have a failing performance test, it’s much easier to fix the system and your application, and to apply specific changes which fix this failing test. It also gives you much more confidence that you changed the right things, so that the production environment handles the same situation much better the next time. Interestingly, to a large extent this also answers question (1).

If you don’t have such a model in this situation, you are badly off. Because then you either start building the performance test model and the performance test from scratch (which takes quite some time), or you switch to the “let’s test our improvements in production” mode. Most often the production testing approach is used (along with some basic testing on stage to avoid making the situation worse), but even that takes time and a high number of production deployments. While you can say it’s agile, others might say it’s chaos and hoping for the best… the actual opposite of good engineering practice.

Summary

In summary, when you have a performance test model, you are more likely to have fewer problems when your system goes live. Mostly because you have invested time and thought in that topic. And because you acted on it. It will not prevent you from making mistakes, forgetting relevant aspects and such, but if that happens you have a good basis to quickly understand the problem and also a good foundation to solve it.

I hope that you learned in these posts some aspects about performance tests which will help you to improve your test approach and test design, so you ultimately have fewer unexpected problems with performance. And if you have fewer problems with that, my life in the AEM CS engineering team is much easier 🙂

Thanks for staying with me throughout this first planned series of blog posts. It’s a bit experimental, and the structure this topic required led to some interesting additions to the overall outline (the first outline covered just 3 posts, now we are at 5). But I think that even that is not enough, and that some aspects deserve a blog post of their own.
