TL;DR Be cautious when implementing interfaces provided by libraries, because you can run into problems when these libraries are updated. Check the @ProviderType and @ConsumerType annotations of the Java interfaces you are using to make sure that you don’t lock yourself to a specific version of a package, as sooner or later this will cause problems.

One of the principles of object-oriented programming is encapsulation, which hides implementation details. Java offers interfaces as a language feature to implement this principle.

OSGI uses a similar approach to implement services. An OSGI service offers its public API via a Java interface. This Java interface is exported and is therefore visible to your Java code. You can then use it the way it is taught in every AEM (and modern OSGI) class:

@Reference

UserNotificationService service;

With the magic of Declarative Services, a reference to an implementation of UserNotificationService is injected and you are ready to use it.

But since that interface is visible, and with the power of Java at hand, you can also write an implementation of that interface on your own:

public class MyUserNotificationService implements UserNotificationService {

...

}

Yes, this is possible and nothing prevents you from doing it. But …

Unlike object-oriented programming, OSGI has some higher aspirations. It focuses on modular software: dedicated bundles which can have independent lifecycles. You should be able to extend functionality in a bundle without having to recompile the code in all other bundles. So binary compatibility is important.

Assume that the framework you are using comes with the UserNotificationService, which looks like this:

package org.framework.user;

public interface UserNotificationService {

void notifyUserViaPopup (User user, NotificationContent notification);

}

Now you decide to implement this interface in your own codebase (hey, it’s public and Java does not prevent me from doing it) and start using it in your codebase:

public class MyUserNotificationService implements UserNotificationService {

public void notifyUserViaPopup (User user, NotificationContent notification) {

..

}

}

All is working fine. But then the framework is adjusted and now the UserNotificationService looks like this:

package org.framework.user;

public interface UserNotificationService { // version 1.1

void notifyUserViaPopup (User user, NotificationContent notification);

void notifyUserViaEMail (User user, NotificationContent notification);

}

Now you have a problem, because MyUserNotificationService is no longer compatible with the UserNotificationService (version 1.1): it does not implement the method notifyUserViaEMail. Most likely your class can no longer be used as it is, and you will see interesting exceptions (in plain Java, calling the missing method on an old binary typically ends in an AbstractMethodError). You would need to adjust MyUserNotificationService and implement the missing method to make it run again, even if you never need the notifyUserViaEMail functionality.

So we have 2 problems with that approach:

- The problem is only detected at runtime, which is too late.

- You should not be required to adapt your code to changes in someone else’s code, especially if the change is just an extension of the API which you are not interested in at all.

OSGI has a solution for the first problem, but only some helpers for the second. Let’s look at the solution for the first problem.

Package versions and references

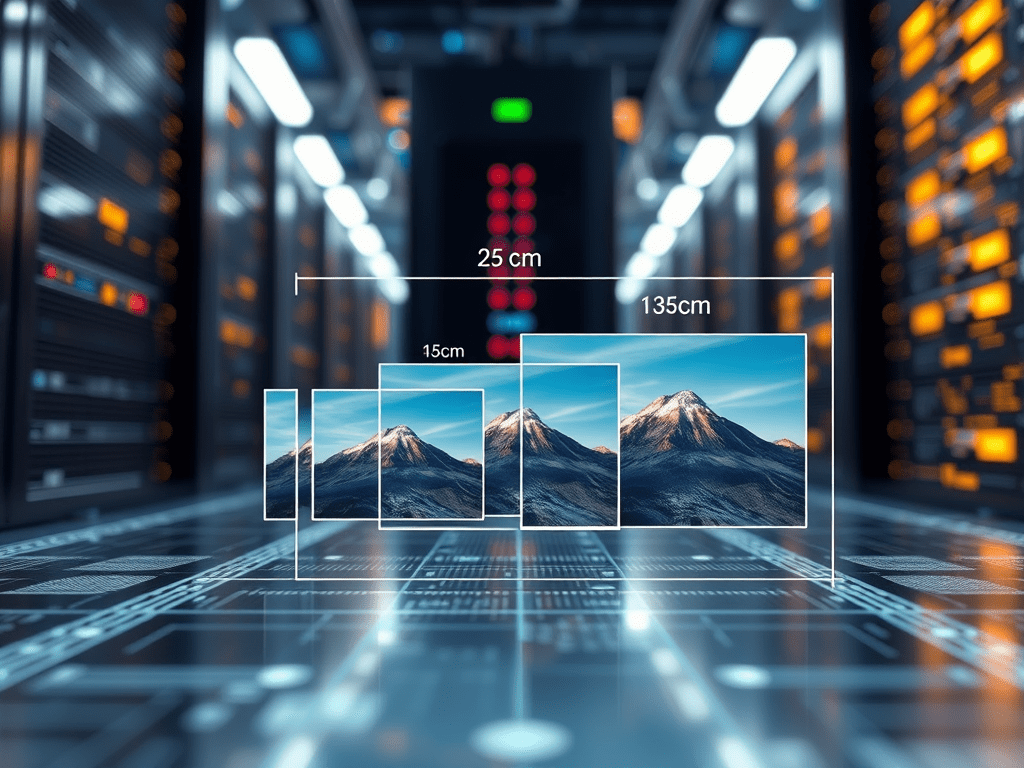

OSGI has the notion of a “package version”, and it’s best practice to provide version numbers for API packages. That means you start with version “1.0” and people start to use it (via service references). When you make a compatible change (as in the example above, where a new method is added to the service interface), you increase the minor version to 1.1, and all existing users can still reference this service, even if their code was never compiled against version 1.1 of the UserNotificationService. This is a backwards-compatible change. If you make a backwards-incompatible change (e.g. removing a method from the service interface), you have to increase the major version to 2.0.
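The versioning rule can be sketched in a few lines of Java. The class and enum names below are made up for illustration and are not part of any OSGI API; the sketch only encodes the minor/major rule as seen by code that merely references the service.

```java
/** Hypothetical helper illustrating the package version rule; not an OSGI API. */
final class PackageVersionSketch {

    /** Kinds of API change, from the point of view of code that only references the API. */
    enum ApiChange { NONE, BACKWARD_COMPATIBLE, BREAKING }

    /** Returns the next package version as "major.minor" for the given kind of change. */
    static String nextVersion(int major, int minor, ApiChange change) {
        switch (change) {
            case BREAKING:
                // e.g. a method was removed from the service interface
                return (major + 1) + ".0";
            case BACKWARD_COMPATIBLE:
                // e.g. a method was added to the service interface
                return major + "." + (minor + 1);
            default:
                return major + "." + minor;
        }
    }

    public static void main(String[] args) {
        System.out.println(nextVersion(1, 0, ApiChange.BACKWARD_COMPATIBLE)); // 1.1
        System.out.println(nextVersion(1, 1, ApiChange.BREAKING));            // 2.0
    }
}
```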

When you build your code with the bnd-maven-plugin (or the maven-bundle-plugin), the plugin automatically calculates the version range for each imported package and stores that information in target/classes/META-INF/MANIFEST.MF. If you just reference services, the import range can be wide like this:

org.framework.user;version="[1.0,2)"

which translates to: This bundle has a dependency on the package org.framework.user with a version equal to or higher than 1.0, but lower than (excluding) 2. That means that a bundle with this import statement will resolve with package org.framework.user 1.1. If your OSGI environment only exports org.framework.user in version 2.0, your bundle will not resolve.
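To make the range notation concrete, here is a minimal sketch of the inclusion check. VersionRangeSketch is a hypothetical, simplified stand-in written for this post; it is not the framework’s own version range class, and it only looks at the numeric major.minor.micro segments.

```java
import java.util.Arrays;

/** Simplified stand-in for a package version range; an illustration, not the OSGI implementation. */
final class VersionRangeSketch {
    private final int[] floor;
    private final int[] ceiling;
    private final boolean floorInclusive;
    private final boolean ceilingInclusive;

    private VersionRangeSketch(int[] floor, boolean floorInclusive,
                               int[] ceiling, boolean ceilingInclusive) {
        this.floor = floor;
        this.floorInclusive = floorInclusive;
        this.ceiling = ceiling;
        this.ceilingInclusive = ceilingInclusive;
    }

    /** Parses ranges such as "[1.0,2)": square brackets are inclusive, round brackets exclusive. */
    static VersionRangeSketch parse(String range) {
        String trimmed = range.trim();
        boolean floorInclusive = trimmed.charAt(0) == '[';
        boolean ceilingInclusive = trimmed.charAt(trimmed.length() - 1) == ']';
        String[] bounds = trimmed.substring(1, trimmed.length() - 1).split(",");
        return new VersionRangeSketch(parseVersion(bounds[0]), floorInclusive,
                                      parseVersion(bounds[1]), ceilingInclusive);
    }

    /** Turns "1.1" into {1, 1, 0}; missing segments default to 0, a fourth segment is ignored. */
    static int[] parseVersion(String version) {
        String[] parts = version.trim().split("\\.");
        int[] result = new int[3];
        for (int i = 0; i < 3 && i < parts.length; i++) {
            result[i] = Integer.parseInt(parts[i]);
        }
        return result;
    }

    /** Returns true if the given version lies inside this range. */
    boolean includes(String version) {
        int[] v = parseVersion(version);
        int lower = Arrays.compare(v, floor);   // lexicographic comparison of the segments
        int upper = Arrays.compare(v, ceiling);
        boolean aboveFloor = floorInclusive ? lower >= 0 : lower > 0;
        boolean belowCeiling = ceilingInclusive ? upper <= 0 : upper < 0;
        return aboveFloor && belowCeiling;
    }

    public static void main(String[] args) {
        VersionRangeSketch wide = parse("[1.0,2)");
        System.out.println(wide.includes("1.1")); // true: the bundle resolves
        System.out.println(wide.includes("2.0")); // false: the bundle does not resolve
    }
}
```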

(Much more can be written in this aspect, and I simplified a lot here. But the above part is the important part when you are working with AEM as a consumer of the APIs provided to you.)

Package versions and implementing interfaces

The situation gets tricky when you are implementing exported interfaces, because that will lock you to a specific version of the package. If you implement the MyUserNotificationService as listed above, the plugins will calculate the import range like this:

org.framework.user;version="[1.0,1.1)"

This basically locks you to version 1.0 of the package: as soon as the package is only exported in version 1.1, your bundle will no longer resolve. While this does not prevent changes to the implementations of the UserNotificationService inside your framework libraries, it prevents any change to its API. And that holds not only for the UserNotificationService, but also for all other classes in the org.framework.user package.

But sometimes the framework requires you to implement interfaces, and its developers “guarantee” that these interfaces will not change. In that case the above behavior does not make sense, as a change to a different class in the same package would not break binary compatibility for these “you need to implement this interface” classes.

To handle this situation, OSGI introduced 2 Java annotations, which can be added to such interfaces and which clearly express the intent of the developers. They also influence the import range calculation.

- The @ProviderType annotation: This annotation expresses that the developer does not want you to implement this interface. This interface is purely meant to be used to reference existing functionality (most likely provided by the same bundle as the API); if you implement such an interface, the plugin will calculate a narrow import range.

- The @ConsumerType annotation: This annotation shows the intention of the developer of the library that this interface can be implemented by other parties as well. Even if the library ships an implementation of that service on its own (so you can @Reference it) you are free to implement this interface on your own and register it as a service. If you implement such an interface with this annotation, the version import range will be wide.
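The effect of the two annotations on the import range can be sketched as follows. This is an illustration only, not the plugin’s real algorithm: the two annotations are local stand-ins declared inside the example (the real ones live in the org.osgi.annotation.versioning package and are evaluated by the build plugin from the class files), and NotificationListener as well as the importRange helper are hypothetical names.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

final class ImportRangeSketch {

    // Local stand-ins so the example compiles without OSGI on the classpath.
    // They use runtime retention only so that this sketch can read them via reflection.
    @Retention(RetentionPolicy.RUNTIME) @Target(ElementType.TYPE) @interface ProviderType {}
    @Retention(RetentionPolicy.RUNTIME) @Target(ElementType.TYPE) @interface ConsumerType {}

    // Meant to be referenced only; the framework ships the implementation.
    @ProviderType interface UserNotificationService {}

    // Hypothetical interface that the framework expects you to implement.
    @ConsumerType interface NotificationListener {}

    /**
     * Returns the import range for code compiled against version major.minor of the API package.
     * Implementing a provider type narrows the range to the current minor version;
     * every other case gets the wide range in this sketch.
     */
    static String importRange(Class<?> api, boolean implemented, int major, int minor) {
        boolean narrow = implemented && api.isAnnotationPresent(ProviderType.class);
        return narrow
                ? String.format("[%d.%d,%d.%d)", major, minor, major, minor + 1)
                : String.format("[%d.%d,%d)", major, minor, major + 1);
    }

    public static void main(String[] args) {
        System.out.println(importRange(UserNotificationService.class, false, 1, 0)); // [1.0,2)
        System.out.println(importRange(UserNotificationService.class, true, 1, 0));  // [1.0,1.1)
        System.out.println(importRange(NotificationListener.class, true, 1, 0));     // [1.0,2)
    }
}
```

Only the second call produces the narrow range: it is the combination of “provider type” and “implemented by you” that locks the bundle to one minor version.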

In the end your goal should be not to have a narrow import version range for any library. You should allow your friendly framework developers (and AEM) to extend existing interfaces without breaking any binary compatibility. And that also means that you should not implement interfaces you are not supposed to implement.
